Cheezam

The main idea

As a French person, I love myself a good cheese board at a restaurant. Every now and then, one cheese in the selection is simply celestial. When that happens, I want to know what cheese it is and keep its details, so I can treat myself to it again in the future. I could just ask the restaurant staff, right? Or I could develop my own app: I take a picture of a delicious piece of cheese, and the app tells me exactly what it is, a bit like using Shazam to identify a piece of music.

Note: this was a school project conducted at IIT, but to be honest, I love the idea so much that I will probably improve this project over time and maybe develop an actual app in the future. I'll post any update here!

The dataset

The dataset is custom-made, built from images scraped from Google Images.

Scraping the images from Google

The images were scraped from Google Images with the Python module google_images_download, using queries whose keywords corresponded to the cheeses I wanted to include. In its current state, the dataset comprises:

- Beaufort · 403 images

- Mozzarella · 288 images

- Comté · 441 images

- Morbier · 347 images

- Bleu d'Auvergne · 386 images

Unfortunately, at the time of this project, Google Images did not allow more than 400 images to be scraped at once. These were always taken from the top of the query results, so repeating a query with the same keywords yielded identical images. Note that the list above contains both Beaufort and Comté. They are very similar-looking cheeses: look them up, and you'll see it is hard to tell them apart just by looking at them. In fact, I chose those two precisely because of that. I wanted to assess my classifier's performance on a dataset of distinct cheeses and see whether including a cheese that closely resembles another one changed the metrics.
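Repeating a query returns the same top results. As a result, merging several downloads for one cheese (for instance, from different keyword variants) can leave exact duplicates in the data. Those copies could then land on both sides of the train/validation split. Below is a minimal sketch that deletes byte-identical files by comparing their SHA-256 hashes. It assumes one folder of image files per cheese, which is a hypothetical layout, and the function name `remove_exact_duplicates` is also hypothetical. It does not detect resized or re-encoded copies of the same picture.

```python
import hashlib
from pathlib import Path


def remove_exact_duplicates(folder: str) -> list[Path]:
    """Delete files in `folder` whose bytes exactly match an earlier file.

    Returns the list of deleted paths. Near-duplicates (resized or
    re-encoded copies of the same picture) are NOT detected.
    """
    seen: dict[str, Path] = {}
    removed: list[Path] = []
    # Sorting makes the choice of which copy is kept deterministic.
    for path in sorted(Path(folder).iterdir()):
        if not path.is_file():
            continue
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        if digest in seen:
            path.unlink()  # an identical file was already kept
            removed.append(path)
        else:
            seen[digest] = path
    return removed
```

You would call it once per class folder, for example `remove_exact_duplicates("dataset/Comté")` with a hypothetical path. Catching near-duplicates would require a perceptual hash instead of a byte hash.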

Data augmentation

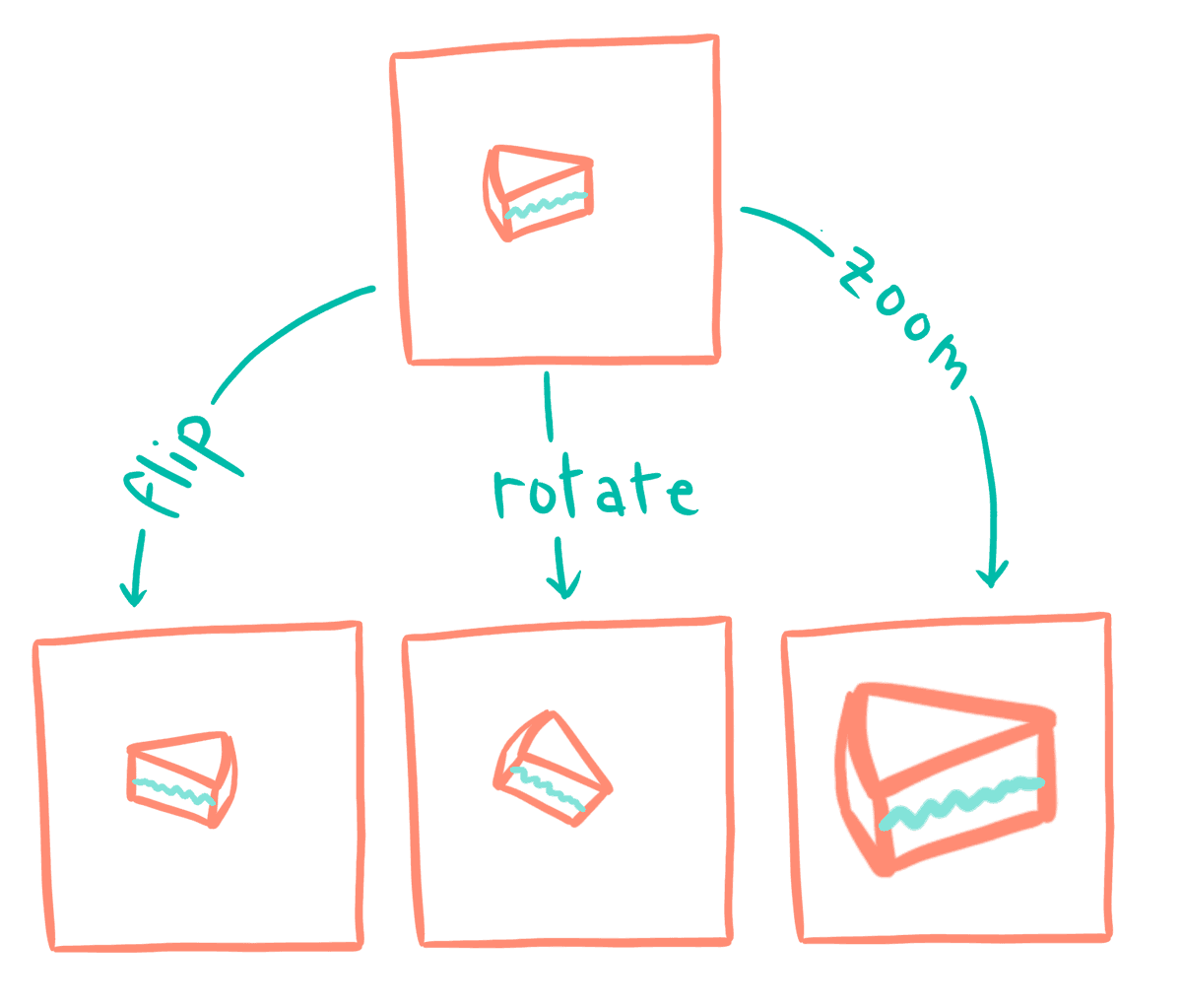

A few hundred images per cheese seemed too few to train a classifier, so I used data augmentation techniques to inflate my dataset. The images were subjected to:

- Random flip

- Random rotations

- Random zoom

Those operations were performed using TensorFlow Keras' preprocessing functions.
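To show what these operations do to a single image, here is a minimal NumPy re-implementation. It assumes images are stored as (height, width, channels) arrays, and the helper names are hypothetical. It is an illustration, not Keras' code: it uses nearest-neighbour sampling and fills uncovered pixels with zeros, whereas Keras' RandomRotation and RandomZoom default to bilinear interpolation and reflect padding.

```python
import numpy as np


def flip_horizontal(image: np.ndarray) -> np.ndarray:
    """Mirror an (H, W, C) image left to right."""
    return image[:, ::-1, :]


def rotate_and_zoom(image: np.ndarray, angle: float, scale: float) -> np.ndarray:
    """Rotate by `angle` radians and zoom by `scale` around the image centre.

    Inverse mapping with nearest-neighbour sampling: for each output pixel,
    look up the source pixel it comes from. scale > 1 zooms in, scale < 1
    zooms out; output pixels whose source falls outside the image become 0.
    """
    h, w = image.shape[:2]
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    dy, dx = ys - cy, xs - cx  # offsets from the centre
    cos_a, sin_a = np.cos(angle), np.sin(angle)
    # Undo the rotation and the (uniform) zoom to find source coordinates.
    src_x = np.rint((cos_a * dx + sin_a * dy) / scale + cx).astype(int)
    src_y = np.rint((-sin_a * dx + cos_a * dy) / scale + cy).astype(int)
    inside = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(image)
    out[inside] = image[src_y[inside], src_x[inside]]
    return out


def random_augment(image, rng, rotation_factor=0.1, zoom_factor=0.1):
    """Random flip + rotation + zoom, loosely mimicking Keras'
    RandomFlip("horizontal"), RandomRotation(0.1) and RandomZoom(0.1)."""
    if rng.random() < 0.5:
        image = flip_horizontal(image)
    # Keras expresses the rotation factor as a fraction of a full turn (2*pi).
    angle = rng.uniform(-rotation_factor, rotation_factor) * 2 * np.pi
    # Keras: a positive zoom factor zooms out; here scale < 1 zooms out.
    scale = 1.0 / (1.0 + rng.uniform(-zoom_factor, zoom_factor))
    return rotate_and_zoom(image, angle, scale)
```

Calling `random_augment(image, np.random.default_rng())` once per epoch yields a slightly different version of the same picture each time. That is the point of augmentation: the network never sees exactly the same pixels twice.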

Classifier architectures

Multiple classifier architectures were trained and their results compared.

Base model

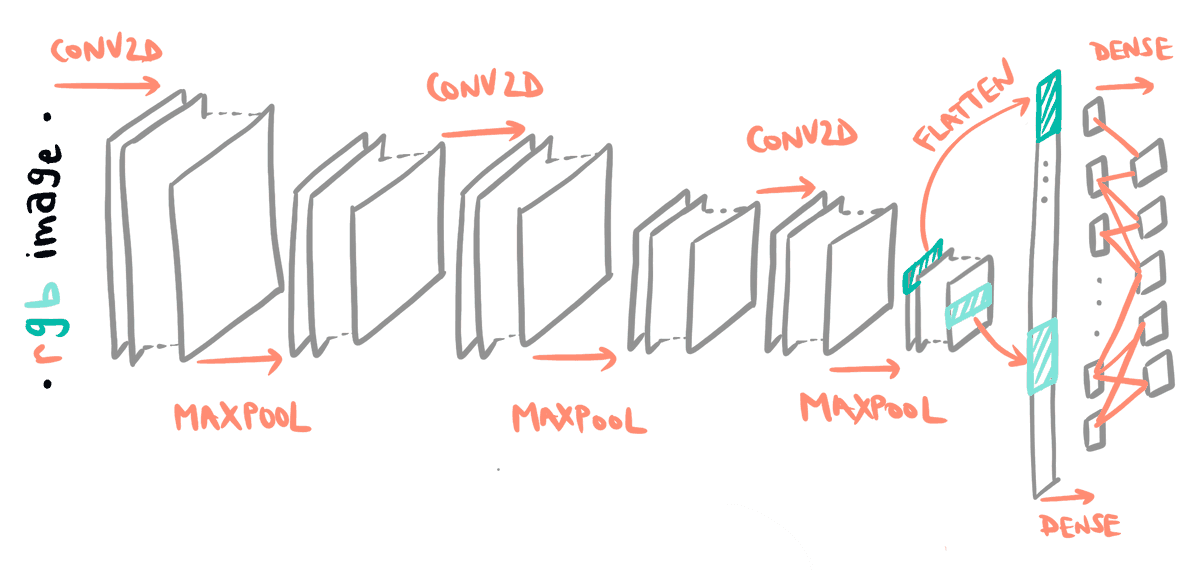

The base model has the following architecture:

| Layer | Output Shape | Activation |
|---|---|---|
| Rescaling | 256·256·3 | None |
| Conv2D | 256·256·32 | ReLU |
| MaxPooling | 128·128·32 | None |
| Conv2D | 128·128·32 | ReLU |
| MaxPooling | 64·64·32 | None |
| Conv2D | 64·64·32 | ReLU |
| MaxPooling | 32·32·32 | None |
| Flatten | 32768 | None |
| Dense | 128 | ReLU |
| Dense | 5 | Softmax |

Schematically, all the layers (omitting the rescaling layer) are represented in the accompanying sketch.

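The initial images are RGB images of different sizes, but they all need to have the same shape (256·256·3) once fed as input to the classifier. Note that in Keras, a Rescaling layer only rescales pixel values (for instance from [0, 255] to [0, 1]). Bringing every image to 256·256 has to happen beforehand, for example when the images are loaded, or in a Resizing layer.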
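The output shapes in the table can be checked with a little arithmetic. Below is a minimal pure-Python sketch, assuming 3×3 kernels with 'same' padding and stride 1, and 2×2 max pooling with stride 2. The table's shapes imply 'same' padding and halving pools, but the post does not state the kernel size. Only the convolutional parameter counts depend on that guess. The function names are hypothetical.

```python
def conv_same(shape, filters, kernel=3):
    """Conv2D, 'same' padding, stride 1: spatial size is unchanged."""
    h, w, c = shape
    params = kernel * kernel * c * filters + filters  # weights + biases
    return (h, w, filters), params


def max_pool(shape, pool=2):
    """Max pooling with stride == pool size: divides height and width."""
    h, w, c = shape
    return (h // pool, w // pool, c), 0  # no trainable parameters


def dense(units_in, units_out):
    """Fully connected layer: one weight per input/output pair + biases."""
    return units_out, units_in * units_out + units_out


def base_model_summary(input_shape=(256, 256, 3), num_classes=5):
    """List (layer, output shape, parameters), skipping the Rescaling layer."""
    rows, shape = [], input_shape
    for _ in range(3):
        shape, p = conv_same(shape, 32)
        rows.append(("Conv2D", shape, p))
        shape, p = max_pool(shape)
        rows.append(("MaxPooling", shape, p))
    flat = shape[0] * shape[1] * shape[2]
    rows.append(("Flatten", (flat,), 0))
    units, p = dense(flat, 128)
    rows.append(("Dense", (units,), p))
    units, p = dense(units, num_classes)
    rows.append(("Dense", (units,), p))
    return rows


if __name__ == "__main__":
    total = 0
    for name, shape, params in base_model_summary():
        total += params
        print(f"{name:<11} {str(shape):<16} {params:>9,}")
    print(f"Total trainable parameters: {total:,}")
```

Under these assumptions, the script reports 4,214,469 trainable parameters. Of these, 4,194,432 (more than 99%) sit in the first Dense layer, right after the 32768-unit Flatten layer.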

Base model with dropout

The base model is reused with a few tweaks: dropout layers are added after the second and third convolutional layers. During training only, these dropout layers randomly set inputs to 0 at a rate of 0.4, and the remaining inputs are scaled up so that their expected sum is unchanged. This helps prevent overfitting, i.e. it keeps the model from picking up details of the training data that may not generalize.
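To make the mechanism concrete, here is a minimal NumPy sketch of inverted dropout, which is how Keras' Dropout layer behaves. During training, each value is zeroed with probability `rate` and the survivors are scaled by 1 / (1 - rate). At inference, the input passes through unchanged. The `dropout` function below is illustrative, not the project's code.

```python
import numpy as np


def dropout(x: np.ndarray, rate: float, rng: np.random.Generator, training: bool) -> np.ndarray:
    """Inverted dropout.

    Training: zero each value with probability `rate`, scale the survivors
    by 1 / (1 - rate) so the expected value of every unit is unchanged.
    Inference: return the input as-is.
    """
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate  # True with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)
```

With `rate=0.4`, roughly 40% of the activations are zeroed at each training step and the rest are multiplied by 1/0.6 ≈ 1.67. As a result, the layer's average output stays about the same between training and inference.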

Base model with dropout and data augmentation

Here again, the base model with dropout is extended with three new layers: RandomFlip, RandomRotation and RandomZoom, with parameters 'horizontal', 0.1 and 0.1 respectively. These layers are placed right after the rescaling layer and before the first convolution. They generate artificial new images by slightly altering the images from the initial dataset, which makes the network more robust to changes in the size or orientation of the cheeses. The operations performed can be visualized in the accompanying figure (the rotation and zoom have been exaggerated for clarity):
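For reference, here is what the three parameters mean under the Keras conventions documented for these layers. The rotation factor is a fraction of a full turn (2π). The zoom factor is a fraction of the image size, with positive values zooming out and negative values zooming in. With width_factor left at its default, height and width are zoomed by the same amount, which preserves the aspect ratio. RandomFlip('horizontal') mirrors each image left to right with probability 1/2.

$$
\theta \sim \mathcal{U}\left(-0.1 \cdot 2\pi,\ 0.1 \cdot 2\pi\right) = \mathcal{U}\left(-0.2\pi,\ 0.2\pi\right) = \mathcal{U}\left(-36^\circ,\ 36^\circ\right)
$$

$$
z \sim \mathcal{U}\left(-0.1,\ 0.1\right) \quad \text{(up to 10\% zoom in or out)}
$$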

VGG-16 with dropout and data augmentation

VGG-16 (VGG stands for Visual Geometry Group) is a convolutional neural network architecture with 16 weight layers: 13 convolutional and 3 fully connected. It was developed by K. Simonyan and A. Zisserman from the eponymous Visual Geometry Group of the University of Oxford, UK. This architecture, along with VGG-19, scored very high in the localization and classification tasks of ILSVRC-2014, which is why I chose it. More details are in the original paper, "Very Deep Convolutional Networks for Large-Scale Image Recognition". There are actually many pre-trained VGG-16 implementations in different languages, but I decided to break it down and code it myself, since I did this project to learn more about convolutional neural networks.

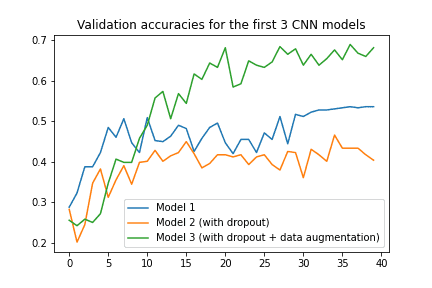
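A from-scratch implementation can be sanity-checked against the published layout. The standard VGG-16 is configuration "D" in the paper: 3×3 convolutions with stride 1 and 'same' padding, 2×2 max pooling, then two 4096-unit fully connected layers and the classifier. It can be tallied in a few lines of pure Python. This sketches the published architecture, not the project's own tuned version, which may differ, for instance in its dense layers or dropout. `vgg16_summary` is a hypothetical helper name.

```python
# VGG-16 (configuration "D"): integers are output channels of 3x3 conv
# layers (stride 1, 'same' padding); "M" is 2x2 max pooling with stride 2.
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]
VGG16_DENSE = [4096, 4096]  # hidden fully connected layers


def vgg16_summary(input_hw=(224, 224), channels=3, num_classes=1000):
    """Return (conv-base output shape, weight layers, conv params, total params)."""
    h, w = input_hw
    c, conv_params, weight_layers = channels, 0, 0
    for item in VGG16_CONV:
        if item == "M":
            h, w = h // 2, w // 2  # pooling halves the spatial size
        else:
            conv_params += 3 * 3 * c * item + item  # weights + biases
            c = item
            weight_layers += 1
    units = h * w * c  # size of the flattened feature map
    dense_params = 0
    for out in VGG16_DENSE + [num_classes]:
        dense_params += units * out + out
        units = out
        weight_layers += 1
    return (h, w, c), weight_layers, conv_params, conv_params + dense_params
```

For the standard 224×224 input and 1000 ImageNet classes, this gives 16 weight layers and 138,357,544 parameters. With this project's 256×256 inputs, the convolutional base ends on an 8×8×512 feature map. Its 14,714,688 convolutional parameters do not depend on the input size, but the first dense layer does. Keeping the original 4096-unit layers with 5 output classes would therefore bring the total to 165,738,309.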

Classification results

The accompanying graph shows the validation accuracies of the base model, its variant with dropout layers, and its variant with dropout and data augmentation. After 40 training epochs, the model using dropout layers and data augmentation clearly performs better than the other two. In fact, with very little time spent tuning it, it reaches a validation accuracy of about 70% on a dataset containing two quasi-identical cheeses.
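A single validation accuracy does not show where the errors come from. Beaufort and Comté were included precisely because they look alike, and a confusion matrix is one way to check whether that pair drives the mistakes. Below is a minimal pure-Python sketch, assuming integer class labels and predictions for the validation set. The class order in `CHEESES` and all function names are hypothetical; the order must match the one the model was trained with.

```python
# Hypothetical label order; it must match the order used during training.
CHEESES = ["Beaufort", "Mozzarella", "Comté", "Morbier", "Bleu d'Auvergne"]


def confusion_matrix(y_true, y_pred, num_classes):
    """matrix[i][j] counts validation images of class i predicted as class j."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def per_class_recall(matrix):
    """Fraction of each class's images that were classified correctly."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(matrix)]


def pair_confusion_share(matrix, a, b):
    """Share of all misclassifications that are swaps between classes a and b."""
    n = len(matrix)
    errors = sum(matrix[i][j] for i in range(n) for j in range(n) if i != j)
    return (matrix[a][b] + matrix[b][a]) / errors if errors else 0.0


def print_report(matrix, names=CHEESES):
    for name, recall in zip(names, per_class_recall(matrix)):
        print(f"{name:<16} recall {recall:.0%}")
```

Suppose `pair_confusion_share(matrix, 0, 2)` (Beaufort and Comté in this hypothetical order) accounts for most of the errors. In that case, the similar pair explains much of the gap to 100%, rather than the classifier struggling across the board.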

I purposely chose not to include the VGG-16 results in this article, because the model's current tuning (as can be seen on GitHub) is not ideal. In its current state, it barely reaches 60% validation accuracy, although it reached above 80% when I submitted my project for grading. I kept experimenting with the activation functions, dropout layers, batch normalization layers and so on, and lost my working local copy. Stay tuned on GitHub for more recent updates!

Future work

More cheeses

The idea is to be able to classify most cheeses from pictures, and there are a LOT of them. But this requires building a more complete dataset, which, as things stand, will be difficult. Not only is the number of images I can get from Google Images limited to a few hundred per cheese type, but some cheeses may also be under-documented. The neural network will also need to be tweaked a little to accommodate the higher number of labels.
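In the base model, the part that necessarily changes with the number of labels N is the final softmax Dense layer, which maps the 128 hidden units to N outputs. Its parameter count grows linearly with N. By contrast, the 32768·128 + 128 = 4,194,432 parameters of the first Dense layer do not depend on N:

$$
P_{\text{out}}(N) = \underbrace{128\,N}_{\text{weights}} + \underbrace{N}_{\text{biases}} = 129\,N, \qquad P_{\text{out}}(5) = 645
$$

Adding labels is therefore cheap in parameters. The harder part, as noted above, is gathering enough images per cheese.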

An app?

The end goal of this project is to build an actual phone application that classifies cheeses on the fly (i.e. when you are at a restaurant and want to know what kind of cheese is on your platter). This is 100% doable, but one issue to keep in mind is that cheeses on platters are often cut into small slices. This means I should probably train my classifier on a dataset of sliced cheeses. However, classifying small slices might be more challenging than classifying whole cheeses or bigger slices. In the end, everything hinges on choosing the right way to build the dataset.

Find this project on GitHub.